ESnet-JLab FPGA Accelerated Transport (EJFAT) Load Balancer

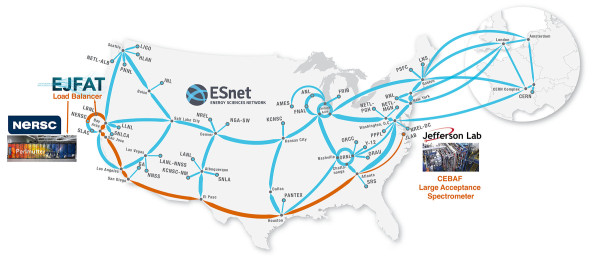

A collaboration between staff at Energy Sciences Network (ESnet) and Thomas Jefferson National Accelerator Facility (Jefferson Lab), the ESnet-JLab FPGA Accelerated Transport, or EJFAT (pronounced “Edge-Fat”), prototype is designed to integrate edge and cluster computing so that data from multiple types of scientific instruments can be streamed to and processed by multiple HPC facilities in near real time and, if needed, so that those data streams can be redirected dynamically.

Watch the YouTube talk by Vardan Gyurjyan (Jefferson Lab), "EJFAT for Nuclear Physics Data Processing: High-Throughput Real-Time Data-Stream Orchestration for a Distributed Workflow Spanning Multiple Facilities at Continental Scale," recorded February 7, 2025.

Interested in seeing a demo of EJFAT? Email engage@es.net

Background

In April 2024, the EJFAT team streamed nuclear physics data at 100 gigabits per second (Gbps) from Jefferson Lab in Newport News, Virginia, thousands of miles across the ESnet6 network backbone directly into the Perlmutter supercomputer at the National Energy Research Scientific Computing Center (NERSC) in Berkeley, California. The data was processed in parallel on 40 Perlmutter nodes (more than 10,000 cores), and the results were streamed back to Jefferson Lab in real time for validation, persistence, and final physics analysis. This milestone was achieved without any intermediate buffering or temporary storage and without significant data loss or latency issues. (Read "California Streamin’: Jefferson Lab, ESnet Achieve Coast-to-Coast Feed of Real-Time Physics Data.")

Within the DOE Office of Science’s programs, there are many comparable instruments that could use the EJFAT prototype to stream data in real time to multiple HPC facilities for processing.

The Impact

Particle accelerators, X-ray light sources, electron microscopes, and other facilities with time-sensitive workflows are fitted with numerous high-speed analog-to-digital (A/D) data acquisition systems (DAQs) that can produce multiple 100 Gbps data streams for recording and processing. Most accelerators and similar instruments are designed to perform data reduction and event identification on site while avoiding writing raw data to disk, which has led to DAQs tightly coupled with large banks of compute nodes (CNs) at the accelerator site. This places compute inefficiently: each bank of CNs is sized for its own accelerator but is used only when that accelerator is running.

With in-network data-stream processing like that offered by EJFAT, raw data travels in one direction and the (typically smaller) processed data returns in real time, with no need for disk storage or buffering. This approach requires no trigger and allows continuous data acquisition, filtering, and analysis. Rather than fetching water bucket by bucket, it’s more like turning on the bandwidth firehose and spraying at full power.

The Technology Behind the Prototype

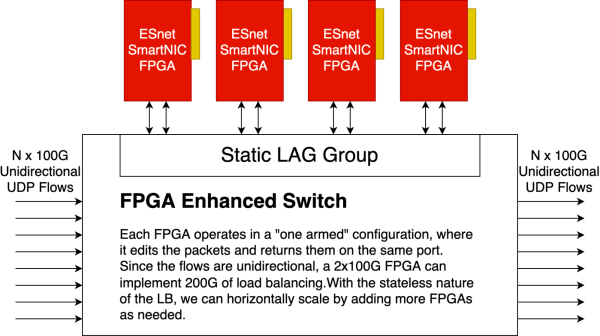

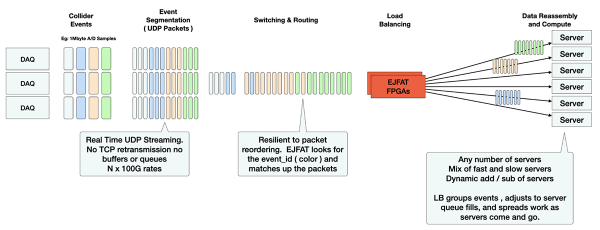

EJFAT is a real-time load balancer that distributes UDP-encapsulated DAQ payloads across a dynamically allocated set of compute elements. It consists of software loaded onto a custom ESnet SmartNIC, a programmable network interface card built on a field-programmable gate array (FPGA) hardware platform. The SmartNIC is designed for networking problems that don’t fit the conventional switch or router model.

EJFAT connects sources of streaming data (the DAQs) to the compute nodes (CNs), but unlike a router, it acts as a “data broker”: sources do not need to know which compute node their stream should be aimed at. All DAQs send their data to a single well-known address for the EJFAT load balancer, which maintains an inventory of available compute resources and distributes the work across the CNs, grouping related pieces of information and sending each group to a single CN.
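To illustrate the decoupling, here is a minimal Python sketch of the DAQ side. It assumes a hypothetical 20-byte header (magic bytes, version, reserved byte, 64-bit event ID, chunk offset, total event length) and a placeholder load-balancer address; neither `HEADER` nor `LB_ADDR` is the published EJFAT format. The point is only that the sender tags every datagram with its event ID and addresses a single endpoint, never a compute node.

```python
import socket
import struct

# Hypothetical header layout (illustrative only, not the published EJFAT wire format):
# 2-byte magic, 1-byte version, 1-byte reserved, 8-byte event ID,
# 4-byte offset of this chunk within the event, 4-byte total event length.
HEADER = struct.Struct("!2sBBQII")
MAGIC = b"LB"
VERSION = 1

# Placeholder "well-known" load-balancer address (192.0.2.0/24 is a documentation range).
LB_ADDR = ("192.0.2.10", 19522)


def packetize(event_id: int, payload: bytes, max_chunk: int = 8192) -> list[bytes]:
    """Split one event into datagrams, each tagged with the same event ID.

    The 8192-byte default assumes jumbo frames (9000-byte MTU).
    """
    datagrams = []
    for offset in range(0, max(len(payload), 1), max_chunk):
        chunk = payload[offset:offset + max_chunk]
        header = HEADER.pack(MAGIC, VERSION, 0, event_id, offset, len(payload))
        datagrams.append(header + chunk)
    return datagrams


def send_event(sock: socket.socket, event_id: int, payload: bytes) -> int:
    """Send every chunk of an event to the one load-balancer address.

    Nothing here names a compute node: choosing the destination is
    entirely the load balancer's job.
    """
    datagrams = packetize(event_id, payload)
    for dgram in datagrams:
        sock.sendto(dgram, LB_ADDR)
    return len(datagrams)
```

A DAQ process would call something like `send_event(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), 42, raw_bytes)`; adding or removing compute nodes requires no change to this code.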

Diagram showing packet processing with the ESnet SmartNIC, which is built on AMD-Xilinx Alveo cards.

EJFAT is thus designed to move what is usually front-end processing across a wide area network to a shared pool of resources, distributing event data to many CNs so that events are processed in parallel on server clusters at high-performance computing (HPC) centers. The load balancer suite has two parts: an FPGA data plane that is dynamically configurable, has low and fixed latency, and redirects packets in real time at high throughput; and a control plane running on the FPGA’s host computer that monitors network and compute-farm telemetry to decide dynamically which destination hosts receive traffic and how load is balanced among them.
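The split between control plane and data plane can be illustrated with a short Python sketch. It assumes the control plane turns per-node queue-fill telemetry into weights (an inverse-queue-fill heuristic chosen here purely for illustration) and writes them into a fixed-size lookup table that the data plane indexes by event ID. `NodeTelemetry`, `TABLE_SIZE`, and the weighting rule are hypothetical, not EJFAT's actual algorithm.

```python
from dataclasses import dataclass

TABLE_SIZE = 512  # hypothetical number of slots in the data-plane lookup table


@dataclass
class NodeTelemetry:
    name: str          # a registered compute node
    queue_fill: float  # fraction of its pending-work queue in use (0.0 to 1.0)


def compute_weights(nodes: list[NodeTelemetry]) -> dict[str, float]:
    """Control plane: favor nodes with emptier queues (a simple heuristic)."""
    # A full queue still keeps a small weight so the node is not dropped outright.
    raw = {n.name: max(1.0 - n.queue_fill, 0.01) for n in nodes}
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def build_table(weights: dict[str, float], size: int = TABLE_SIZE) -> list[str]:
    """Control plane: give each node a share of slots proportional to its weight."""
    quotas = {name: w * size for name, w in weights.items()}
    counts = {name: int(q) for name, q in quotas.items()}
    # Largest-remainder rounding so the slot counts add up to exactly `size`.
    leftover = size - sum(counts.values())
    by_remainder = sorted(quotas, key=lambda n: quotas[n] - counts[n], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    # Interleave nodes so consecutive event IDs land on different nodes.
    table: list[str] = []
    while any(c > 0 for c in counts.values()):
        for name in sorted(counts):
            if counts[name] > 0:
                table.append(name)
                counts[name] -= 1
    return table


def route(event_id: int, table: list[str]) -> str:
    """Data plane: one table lookup, so every packet of an event goes to one node."""
    return table[event_id % len(table)]
```

In this sketch, re-running `compute_weights` and `build_table` as telemetry arrives is what shrinks a busy node's share; the data-plane `route` keeps no per-event state.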

In essence, EJFAT acts as a lightning-speed traffic controller and packet cannon. It can identify incoming data from multiple events on the fly and, within nanoseconds, direct it to its designated network path, which may lead to computing servers thousands of miles away. The data acquisition systems place an event identification number in a special header field immediately after the UDP header. EJFAT parses this field and sends all data with the same event ID to a single server, which it selects from the pool of registered servers. Because these servers will likely finish their work at slightly different rates, EJFAT increases or decreases the amount of work it assigns to each server based on that server’s pending work queue.
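Extracting the event ID is a fixed-offset read once the IP and UDP headers are skipped. The Python sketch below assumes an IPv4 packet and a hypothetical 20-byte header (magic bytes, version, reserved byte, 64-bit event ID, chunk offset, total length) placed right after the UDP header; the real EJFAT header layout is defined in the project's paper and may differ. On the FPGA this parsing happens in hardware; the code only shows the offsets involved.

```python
import struct

# Hypothetical load-balancer header placed right after the UDP header
# (illustrative layout only): magic, version, reserved, 64-bit event ID,
# chunk offset, total event length.
LB_HEADER = struct.Struct("!2sBBQII")
UDP_HEADER_LEN = 8
IPPROTO_UDP = 17


def extract_event_id(ip_packet: bytes) -> int:
    """Return the event ID carried just after the UDP header of an IPv4 packet."""
    version, ihl = ip_packet[0] >> 4, ip_packet[0] & 0x0F
    if version != 4 or ihl < 5:
        raise ValueError("not a valid IPv4 header")
    if ip_packet[9] != IPPROTO_UDP:
        raise ValueError("not a UDP packet")
    # IHL counts 32-bit words, so any IPv4 options are skipped automatically.
    lb_offset = ihl * 4 + UDP_HEADER_LEN
    if len(ip_packet) < lb_offset + LB_HEADER.size:
        raise ValueError("packet too short for the load-balancer header")
    magic, _ver, _rsvd, event_id, _offset, _total = LB_HEADER.unpack_from(ip_packet, lb_offset)
    if magic != b"LB":
        raise ValueError("missing load-balancer header")
    return event_id
```

Because the forwarding decision in this sketch depends only on this one field, every chunk of an event can be sent to the same server regardless of arrival order.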

EJFAT performs dynamic load balancing by coherently grouping events and dynamically assigning work to compute nodes.

EJFAT’s packet-forwarding protocol is stateless: an FPGA can forward a given packet without relying on any other context or state, so parallel FPGAs can be added to scale throughput in 100 Gbps increments without a fixed ceiling. Need more compute power? Compute hosts can be added independently of the number of source DAQs. EJFAT also accommodates a flexible number of CPUs and threads per host, treating each receiving thread as an independent load-balancing destination.
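Two properties from this paragraph can be shown together in a Python sketch: each receiving thread is its own destination, and a forwarding decision that reads only the packet's event ID and a shared table gives the same answer on any number of parallel forwarders. The `base_port` numbering, the host names, and the modulo lookup are assumptions for illustration, not EJFAT's published scheme.

```python
from typing import NamedTuple


class Destination(NamedTuple):
    host: str
    port: int  # one UDP port per receiving thread (hypothetical convention)


def expand_threads(threads_per_host: dict[str, int], base_port: int = 17750) -> list[Destination]:
    """Turn 'host has N receiving threads' into N independent destinations."""
    return [
        Destination(host, base_port + t)
        for host in sorted(threads_per_host)
        for t in range(threads_per_host[host])
    ]


def forward(event_id: int, table: tuple[Destination, ...]) -> Destination:
    """Stateless decision: uses only the packet's event ID and the shared table."""
    return table[event_id % len(table)]


def destinations_seen(packets, n_forwarders: int, table) -> dict[int, set[Destination]]:
    """Spread packets across parallel forwarders and record where each event lands."""
    # Each forwarder (think: one FPGA) holds its own copy of the same table.
    copies = [tuple(table) for _ in range(n_forwarders)]
    seen: dict[int, set[Destination]] = {}
    for i, (event_id, _chunk) in enumerate(packets):
        dest = forward(event_id, copies[i % n_forwarders])
        seen.setdefault(event_id, set()).add(dest)
    return seen
```

Because no forwarder in this sketch keeps per-event state, adding another forwarder needs no coordination beyond distributing the same table, and every event still lands on exactly one thread.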

For more details, see the March 2023 paper, “EJFAT Joint ESnet JLab FPGA Accelerated Transport Load Balancer.”

What’s Next

The ESnet-Jefferson Lab project team is working with researchers at the Advanced Light Source at Berkeley Lab, the Advanced Photon Source at Argonne National Laboratory, and the Facility for Rare Isotope Beams at Michigan State University to develop a software plugin adapter that can be installed in streaming workflows to give them access to compute resources at NERSC, the Oak Ridge Leadership Computing Facility, and the High Performance Data Facility (HPDF) testbed.

ESnet EJFAT Project Team:

- CTO Chin Guok

- ESnet Planning & Innovation Consulting Scientists Yatish Kumar and Stacey Sheldon

- Software Engineer Derek Howard
