In-Network Caching Shown to Enhance Science Community's Access to Experimental Data

ESnet researchers were part of a trial that demonstrated the speed and reliability of this data-sharing network model

Contact: cscomms@lbl.gov

Researchers from the Energy Sciences Network (ESnet) and Lawrence Berkeley National Laboratory's (Berkeley Lab's) Computational Research Division are part of a team that has successfully tested a model for a data-sharing network that could dramatically reduce the time it takes to access experimental scientific data.

The collaboration, which also included researchers from UC Berkeley, Middlebury College, and UC San Diego, ran an in-network caching trial that demonstrated how a network of servers located within a 500-mile region could provide scientists with experimental data faster and more reliably than a single overseas connection.

The trial confirmed that such a network, running 500 miles down the coast of California, could deliver experimental data in a timely and reliable manner while dramatically reducing the stress on long-distance networks.

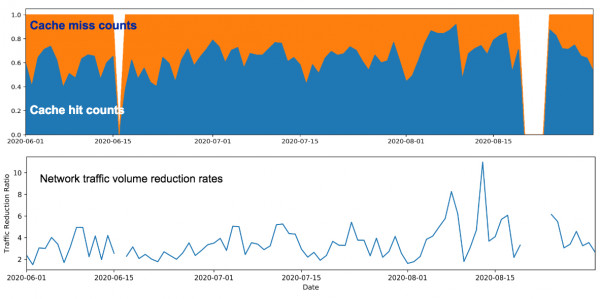

The top graph shows the proportion of cache hits versus cache misses, which demonstrates how well the cache reduced the number of data transfers: it saved 2.6 million transfers and reduced the average network demand frequency by a factor of 2.6. The bottom plot shows how the network traffic volume reduction rate changed over time; the cache saved 2.17PB, with network traffic volume reduced by an average factor of 2.9096.

Born from LHC demands

The in-network caching project was hatched out of a need to adapt to demands posed by the Large Hadron Collider (LHC) at CERN. Data generated by the facility’s large-scale ATLAS and CMS particle physics experiments is transmitted to supercomputer sites in the U.S., such as the National Energy Research Scientific Computing Center (NERSC) at Berkeley Lab, for processing, and is then distributed to researchers around the world for analysis. The caching project links servers in Sunnyvale, San Diego, and Pasadena to create a local source of ATLAS and CMS experiment data that can be accessed without taxing the transatlantic connection.

When CERN decided to upgrade the LHC to a “high luminosity” state that would allow the collider to collect larger amounts of collision data, potentially paving the way for new breakthroughs in physics, ESnet was tasked with handling vastly more raw data.

These more finely detailed measurements will produce up to six times more data to be processed and analyzed. The caching project team realized that the resulting surge in network traffic would tax the DOE-managed transatlantic cables as researchers on both continents sought to analyze the data.

“Transatlantic is significantly more expensive [in terms of monetary costs and network latency] than any of the terrestrial bandwidth,” explained Chin Guok, Planning and Architecture Group Lead at ESnet.

To address this transatlantic traffic issue, it was proposed that strategically placed storage servers, called XCache, could act as regional caches, serving experimental data to local university and government researchers and reducing the need for long-distance or overseas connections. When a user requested a specific dataset, the system would first check whether the data was already in its cache and, if not, pull the data from the source and add it to the cache for other users to access.
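The check-then-fetch behavior described above is the classic read-through caching pattern. The Python sketch below illustrates it under simplifying assumptions: the class name `ReadThroughCache`, the `fetch_from_origin` callback, and the dataset names are hypothetical, storage is an unbounded in-memory dictionary, and nothing here reflects XCache's actual interface or implementation.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Toy regional cache: serve locally on a hit, fetch upstream on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        # Hypothetical callback standing in for a long-distance transfer
        # from the primary data source.
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}  # unbounded: no eviction in this sketch
        self.hits = 0
        self.misses = 0

    def get(self, dataset_id: str) -> bytes:
        if dataset_id in self._store:
            # Cache hit: the data is already held at the regional node.
            self.hits += 1
            return self._store[dataset_id]
        # Cache miss: pull the data from the source, then keep a copy
        # so later requests from other users are served locally.
        self.misses += 1
        data = self._fetch(dataset_id)
        self._store[dataset_id] = data
        return data


if __name__ == "__main__":
    upstream_requests = []

    def fake_origin(dataset_id: str) -> bytes:
        upstream_requests.append(dataset_id)
        return f"contents of {dataset_id}".encode()

    cache = ReadThroughCache(fake_origin)
    for ds in ["run-a", "run-b", "run-a", "run-a"]:
        cache.get(ds)
    print(cache.hits, cache.misses, len(upstream_requests))  # prints: 2 2 2
```

Only the first request for each dataset reaches the origin; repeat requests are answered locally. A real deployment would also need an eviction policy once storage fills up, which is one reason cache sizing matters.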

From Sunnyvale to San Diego

The regional caching service ran roughly 500 miles from an ESnet hub in Sunnyvale, Calif., down to the UC San Diego (UCSD) campus, with additional service nodes at the Caltech campus in Pasadena, Calif. In total, it consisted of 14 server nodes with storage capacities ranging from 24TB to 180TB. The cached data came from LHC experiments.

The researchers monitored not only uptime and round-trip time but also the rate of cache hits (instances where the requested data was already stored in the cache) and cache misses (instances where the data had to be pulled from its primary source).
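As a rough illustration of how hit and miss counts turn into the kinds of figures quoted in the graph caption, the sketch below summarizes a request log. The log format and the metric definitions (hit rate, transfers saved, and demand and volume reduction factors computed as requests or bytes seen by the cache divided by those forwarded upstream) are assumptions for illustration and may differ from how the study defined its metrics.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0
    bytes_served: int = 0       # all bytes delivered to users
    bytes_from_origin: int = 0  # bytes fetched upstream on misses

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    @property
    def transfers_saved(self) -> int:
        # Assumption: each hit avoids exactly one long-distance transfer.
        return self.hits

    @property
    def demand_reduction_factor(self) -> float:
        # Requests seen by the cache divided by requests forwarded upstream.
        if self.misses == 0:
            return float("inf")
        return (self.hits + self.misses) / self.misses

    @property
    def volume_reduction_factor(self) -> float:
        # Bytes delivered to users divided by bytes pulled over the long-haul link.
        if self.bytes_from_origin == 0:
            return float("inf")
        return self.bytes_served / self.bytes_from_origin


def summarize(log: Iterable[Tuple[bool, int]]) -> CacheStats:
    """Summarize a hypothetical log of (was_hit, size_in_bytes) records."""
    stats = CacheStats()
    for was_hit, size in log:
        stats.bytes_served += size
        if was_hit:
            stats.hits += 1
        else:
            stats.misses += 1
            stats.bytes_from_origin += size
    return stats


if __name__ == "__main__":
    s = summarize([(False, 100), (True, 100), (True, 100), (False, 50)])
    print(s.hit_rate, s.transfers_saved, s.demand_reduction_factor)  # prints: 0.5 2 2.0
```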

Over the course of the six-month trial, the results were encouraging. The team found that the hit rate increased over time and that, in many cases, popular datasets were served locally, often to one researcher or to a specific set of researchers.

Having this data at a nearby facility resulted in lower access times (a 10-millisecond average was recorded throughout the study) and also reduced the number of queries sent over the long-distance network.

“When they are asking for data from Chicago to San Diego, it has to hop over several places within the network,” explained Alex Sim, senior computing engineer in Berkeley Lab’s Computational Research Division and lead researcher on the in-network caching trial. “If we have the in-network cache in Sunnyvale near where the data is going, access to the data has less delay.”

It is no accident that the pilot regional caching service covered roughly 500 miles. When UCSD researchers first presented the idea of research caches, they observed that many of the countries in continental Europe, as well as the UK, stretch around 500 miles from border to border. Should in-network caching prove viable, each nation could set up its own regional cache to serve data locally to all of its universities and major research centers.

This distance is not only socio-politically relevant, said Sim, but also ensures fast access times, because data stays on the same national infrastructure.

“If the data access time is more than 10 milliseconds, it affects the performance,” he explained.
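A back-of-the-envelope calculation shows why a roughly 500-mile footprint fits within Sim's 10-millisecond figure. The sketch assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds the speed of light in a vacuum) along a straight path, and it ignores routing detours, switching, queuing, and server time, so it gives a lower bound rather than a measured latency. The function name `fiber_rtt_ms` is hypothetical.

```python
MILES_TO_KM = 1.609344
# Assumption: light travels through optical fiber at roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0


def fiber_rtt_ms(distance_miles: float) -> float:
    """Best-case round-trip propagation delay over a straight fiber path.

    Ignores routing detours, switching, queuing, and server time,
    so real-world round trips will be longer.
    """
    distance_km = distance_miles * MILES_TO_KM
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    print(f"{fiber_rtt_ms(500):.2f} ms")  # prints: 8.05 ms
```

In this idealized model, going 500 miles and back costs about 8 ms of pure propagation delay, leaving little headroom under 10 ms; a substantially larger region would exceed that budget before any other overhead is counted.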

Europe is not the only region where the 500-mile scale matters. Some major population belts in the U.S., such as the corridor from Washington, DC, to Boston, cover roughly the same distance, so in-network caches in those areas could provide similar access speed and reliability.

Next steps

Looking ahead, the team would like to analyze data from the experiment to gauge retransmission, or how many times a set of data was repeatedly accessed and transmitted. The team is also working to determine the optimal cache size, one that would allow data to be held longer while it is still of interest to users.
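One way to explore both questions is to replay a request trace through a simulated cache at several sizes and compare hit rates. The sketch below assumes least-recently-used (LRU) eviction, which the article does not specify for the trial, and counts every request for a dataset after its first access as a repeat access; the function names, trace, and sizes are hypothetical.

```python
from collections import Counter, OrderedDict
from typing import Dict, List, Sequence


def repeat_accesses(trace: Sequence[str]) -> Dict[str, int]:
    """Count requests for each dataset beyond its first access."""
    counts = Counter(trace)
    return {name: n - 1 for name, n in counts.items() if n > 1}


def lru_hit_rate(trace: Sequence[str], sizes: Dict[str, int], capacity: int) -> float:
    """Replay a request trace through an LRU cache holding at most `capacity` units."""
    cache: "OrderedDict[str, int]" = OrderedDict()
    used = 0
    hits = 0
    for name in trace:
        if name in cache:
            hits += 1
            cache.move_to_end(name)  # mark as most recently used
            continue
        size = sizes[name]
        if size > capacity:
            continue  # never fits; always served from the origin
        # Evict least recently used datasets until the new one fits.
        while used + size > capacity:
            _, evicted_size = cache.popitem(last=False)
            used -= evicted_size
        cache[name] = size
        used += size
    return hits / len(trace) if trace else 0.0


def sweep(trace: Sequence[str], sizes: Dict[str, int], capacities: List[int]) -> Dict[int, float]:
    """Hit rate for each candidate cache capacity."""
    return {c: lru_hit_rate(trace, sizes, c) for c in capacities}


if __name__ == "__main__":
    trace = ["a", "b", "a", "c", "a", "b"]
    sizes = {"a": 10, "b": 10, "c": 10}  # hypothetical sizes, e.g. in TB
    print(repeat_accesses(trace))
    print(sweep(trace, sizes, [10, 20, 30]))
```

In this toy trace the hit rate climbs from 0 to one-half as capacity grows from one dataset to three; beyond the point where every requested dataset fits, extra capacity buys nothing, which is the trade-off an optimal-size study weighs.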

Further down the road, Sim said, the team hopes to expand the project to other research efforts. He describes the vision as a sort of “Netflix for research,” where data can be pulled on demand efficiently and reliably, regardless of where it is hosted.

“If the dataset is not large and [is] accessed often enough, the in-network caching service can hold [it] semipermanently, depending on the caching policy,” said Sim.

ESnet is a U.S. Department of Energy Office of Science user facility.