DIII-D National Fusion Facility, NERSC, AMCR, and ESnet Collaboration Speeds Nuclear Fusion Research

By Elizabeth Ball (NERSC), Bonnie Powell (ESnet), and Lindsay Ward-Kavanagh (DIII-D)

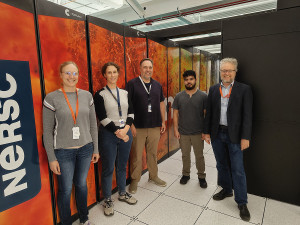

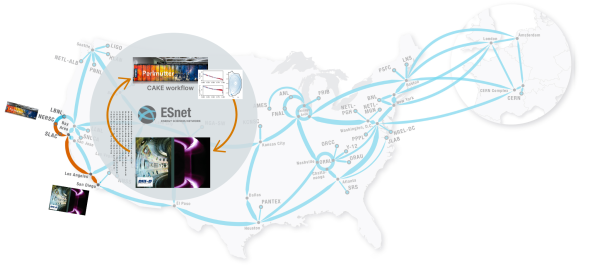

The Superfacility model connecting DIII-D and NERSC via ESnet enabled DIII-D to send fusion experiment data to NERSC’s Perlmutter supercomputer for large-scale automated analysis and high-fidelity reconstruction of plasma pulses.

As the global population continues to grow, demand for energy will also increase. One potentially transformative way of meeting that demand is by generating energy using nuclear fusion, which does not emit carbon or consume scarce natural resources and could therefore present a solution that is both equitable and ecologically sound. Although major scientific, technological, and workforce development hurdles remain before energy produced by nuclear fusion is widely available, U.S. Department of Energy (DOE)-funded researchers at the DIII-D National Fusion Facility, the National Energy Research Scientific Computing Center (NERSC), Lawrence Berkeley National Laboratory’s Applied Mathematics and Computational Research (AMCR) Division, and the Energy Sciences Network (ESnet) are teaming up to bring that vision closer to reality.

This new collaboration leverages high-performance computing (HPC) at NERSC, DOE’s high-speed data network (ESnet), AMCR’s performance optimization capabilities, and DIII-D’s rich diagnostic suite to make important data from fusion experiments more useful and available to the global fusion research community, helping to accelerate the realization of fusion energy production.

Data + High-Performance Networking + High-Speed Computing = Breakthroughs

At DIII-D, researchers perform experiments to understand how to harness nuclear fusion as a viable energy source. Making the most of these experimental resources requires rapid processing of massive amounts of experimental data, a challenge well-suited to HPC facilities. To meet this challenge, a team from DIII-D, AMCR, NERSC, and ESnet collaborated to develop a “Superfacility”: a multi-institution scientific environment composed of experimental resources at DIII-D and HPC resources at NERSC, interconnected via ESnet6, the latest iteration of ESnet’s dedicated high-speed network for science.

“To achieve the goal of fusion, all available resources, including HPC, need to be applied to the analysis of present fusion experiments to be able to extrapolate to future fusion power plants,” commented Sterling Smith, the project lead for the DIII-D team involved in the Superfacility effort. “The use of HPC accelerates advanced analyses so that they can feed into the scientific understanding of fusion and point us toward the solutions needed to realize fusion energy production.”

The Superfacility model harmonizes with the larger DOE vision of an Integrated Research Infrastructure (known as IRI; see report). It enables near-real-time analysis of massive quantities of data during experiments, allowing researchers to tailor the experimental process as it unfolds and accelerating the pace of experimental science.

“We have long recognized that all experimental science teams need a better way to connect experiment facilities with high-speed networks and HPC,” said NERSC Data Department Head Debbie Bard, who leads the broader Superfacility work at Lawrence Berkeley National Laboratory (Berkeley Lab). “We started the Superfacility work at Berkeley Lab as a broad initiative to develop the tools, infrastructure, and policies to enable these connections. DIII-D, AMCR, and ESnet have been key partners in this work.”

Added Raffi Nazikian, head of ITER research and Senior Director at General Atomics (host of the DIII-D National Fusion Facility), “We see the Superfacility concept, and the emerging Integrated Research Infrastructure under the DOE Office of Advanced Scientific Computing Research, as a transformative capability for fusion research and look forward to exploring its full potential, beginning with the DIII-D/NERSC Superfacility.”

Making every shot count

The device at the heart of the DIII-D National Fusion Facility, the DIII-D tokamak, creates plasmas. In the tokamak, gas atoms are heated to temperatures hotter than the Sun, which causes them to separate into their component electrons and nuclei. The free nuclei may crash into each other and fuse, releasing energy. During experimental sessions, the research team studies the behavior of short plasma discharges called shots, typically performed at 10- to 15-minute intervals, with nearly 100 diagnostic and instrumentation systems capturing gigabytes of data during each shot. Between shots, scientists have a brief window to address any issues or evaluate how specific parameter settings affect plasma behavior.

Previously, making adjustments between shots required extensive manual calculations by subject-matter experts, a time- and labor-intensive process that still offered limited information. Automating some of this work had long been a potential solution. However, the computational needs of experiments at DIII-D exceeded what standard computing systems could handle, and gaining access to a separate supercomputing center on an individual-scientist basis tended to be prohibitively difficult and time-consuming.

“While DIII-D has automated rapid data processing performed on local computing systems to provide near-real-time feedback to scientists to inform experimental decision-making, over the years, the fidelity of models and understanding of the physics has increased dramatically,” said David Schissel, DIII-D Computer Systems and Science Coordinator. “Experiments now require higher-resolution, higher-fidelity analyses that cannot be completed on our local systems. The ability to perform this much more detailed analysis in near-real time to inform control room decision-making is possible only through the Superfacility model, which will allow researchers to make better adjustments and learn more from their experiments.”

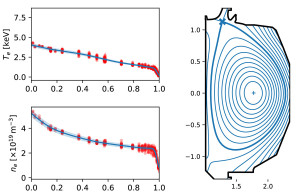

The technical process of establishing the Superfacility model connecting DIII-D and NERSC via ESnet began with coordinating code: first establishing that the EFIT code used at DIII-D to calculate the device’s equilibrium magnetic field profile would run well on NERSC’s Perlmutter supercomputer. With that established, the combined team adopted the Consistent Automated Kinetic Equilibria (CAKE) workflow, developed by Prof. Egemen Kolemen’s team at Princeton University for DIII-D, to bring together all the “ingredients” provided by separate analysis and modeling codes to produce full descriptions of plasma behavior in the DIII-D tokamak. By monitoring and adjusting the CAKE workflow for optimal use on the Perlmutter system, the Superfacility team was able to decrease the time-to-solution by 80%, from 60 minutes to 11 minutes for a benchmark case.

“DOE has invested in establishing DIII-D as the best-diagnosed fusion facility in the world. However, large-scale automated analysis, using tools like CAKE, is the only way to convert all the diagnostic data into useful information; the Superfacility team is taking a key step toward the future of fusion," said Prof. Egemen Kolemen.

Data collected by DIII-D diagnostics (left) & associated magnetic field reconstruction created using DIII-D/NERSC Superfacility resources.

The increase in speed made it possible to complete these analyses, plus additional follow-on analysis, between DIII-D experimental shots. Between 2008 and 2022, before DIII-D had real-time access to HPC, only 4,000 hand-made reconstructions were produced. In the first six months of DIII-D/NERSC Superfacility operation, DIII-D produced more than 20,000 automated high-resolution magnetic field profile reconstructions for 555 DIII-D shots.

These reconstructions now form a database of high-fidelity results that all DIII-D users can access to inform experimental planning and interpretation. This cache of information will benefit the push for fusion energy worldwide.

“DIII-D (and fusion more generally) presents a use-case where returning results quickly really matters,” said Laurie Stephey, a member of the NERSC team. “Many types of fusion simulation and data analysis are too computationally demanding to be run on local resources between shots, so this often means that these analyses either never get done, or if they do get done, they are often finished too late to be actionable. This Superfacility project combines DIII-D and HPC resources to produce something greater than the sum of their parts – just-in-time scientific results that would otherwise not be possible.”

Democratizing access to HPC resources

The success of the DIII-D/NERSC Superfacility model is a victory for fusion energy research today, and it may also be a template for expanding the use of Superfacility and other IRI collaborations across the DOE lab complex. The DIII-D team is also working with staff at the Argonne Leadership Computing Facility to analyze plasma pulses quickly, again using ESnet but with a different approach to the workflow.

“ESnet is actively exploring ways to improve network performance for the DIII-D/NERSC Superfacility and other collaborations. As the workflow matures, we anticipate being able to deploy advanced ESnet services that will enable the project to easily expand into multiple computing environments at multiple HPC facilities, in a performant and scientist-friendly way,” said ESnet Science Engagement Team network engineer Eli Dart.

In addition to improving science outcomes, the Superfacility model can help make the experimental process more equitable and inclusive for a broader range of researchers. Providing HPC access to all team researchers without requiring them to acquire their own allocation of compute time allows newer groups and researchers to participate in experimental sessions and connects all members of experimental teams. Additionally, the physically distributed nature of the Superfacility lowers barriers to entry for subject-matter experts who may be needed for data analysis during experiments. This may make it easier for early-career researchers with smaller professional networks to collaborate with these experts on their research projects. Overall, these changes contribute to a more inclusive environment for all researchers and build a fusion workforce from a wider cross-section of experts.

Funded by both DOE’s Fusion Energy Sciences (FES) and Advanced Scientific Computing Research (ASCR) programs, this Superfacility collaboration demonstrates the value of combining experimental science with HPC resources to produce the solutions needed to realize fusion energy production.